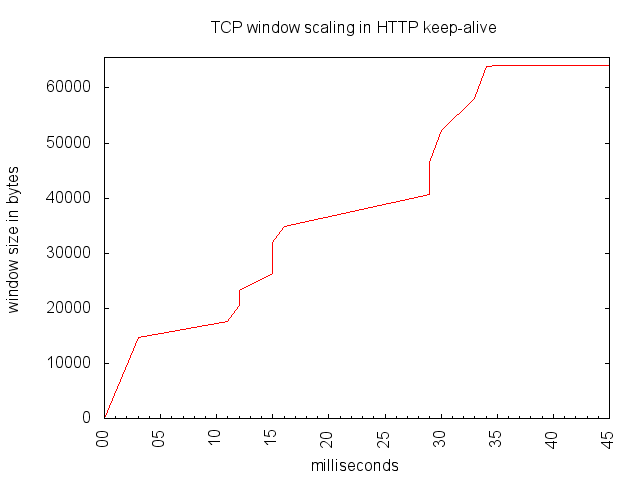

The graph below shows client-side TCP window scaling (while the client downloads the HTML sent by the server). The client is a Linux box running elinks, and I simply took a tcpdump of the connection between the client and the server.

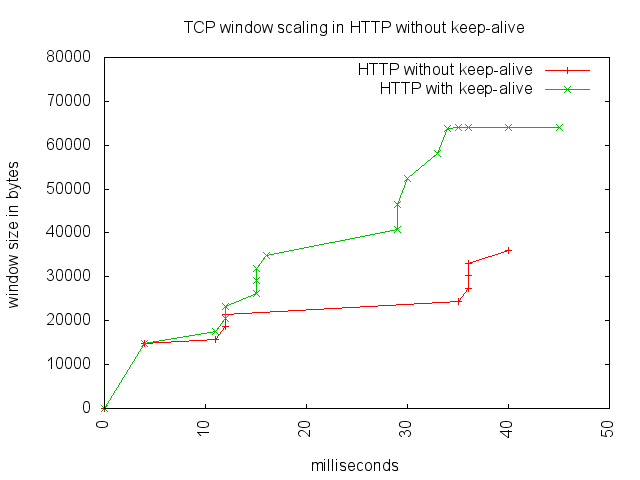

It's interesting to compare the curve above with the same connection without keepalive:

Keepalive transfers more data, making the window scale faster. In the red connection, only 10,290 bytes of HTTP are received by the client. But in the green one, using keep-alive, 50,814 bytes are received in the same amount of time.

The goal? Figuring out the right kernel parameters to optimize response time in a full HTTP environment. Almost all the system interactions inside my work infrastructure are performed via HTTP REST APIs. Optimizing the TCP stack between servers becomes really interesting when building a page requires several HTTP requests to multiple servers.

But the real goal is to write a new article in GLMF ;)

Another one: the graph below measures the window size on a nuttcp server between two Ubuntu boxes (the nuttcp server being a VM). Both run kernel 2.6.32, with no tuning beyond the Ubuntu defaults.

In this last graph, the TCP window scales all the way up to 195,200 bytes. That's roughly 3 times the maximum window size defined by RFC 793. This is made possible by RFC 1323 and its Window Scale option.

The Window Scale option carries a one-byte shift count that is used to left-shift the 16-bit window field of the TCP header. RFC 1323 limits that shift count to 14, so a window field with all 16 bits set to one (65,535 bytes) can be scaled up to a window of about 1 GB:

$ echo $((65535 << 14))

1073725440

Now how useful is that? Well, the window size is the size of the receiver's buffer as communicated to the sender. The role of a buffer is essentially to store data until the application can consume it (on the receiver's side), or until an ACK is received (on the sender's side).

The maximum amount of data that can be travelling on a network at a given time is called the Bandwidth Delay Product (BDP). BDP is calculated by multiplying the bandwidth of the link by its round-trip time.

Example with a 50 Mbps link over a WAN:

Bandwidth = 50 megabits per second = 50*10^6 bits/second

RTT = 2.7 milliseconds = 0.0027 seconds

BDP = Bandwidth * RTT

BDP = 50*10^6 * 0.0027 = 135,000 bits = 16,875 bytes

(we multiply bits/second by seconds, so we obtain bits)
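The arithmetic above is easy to wrap in a small shell helper. This is only a sketch: `bdp_bytes` is a name made up for this post, and it takes the RTT in microseconds so that the shell's integer arithmetic is enough.

```shell
# bdp_bytes BANDWIDTH_BITS_PER_SECOND RTT_MICROSECONDS
# Prints the bandwidth-delay product in bytes (hypothetical helper).
bdp_bytes() {
    bandwidth=$1   # bits per second
    rtt_us=$2      # round-trip time in microseconds
    # bits in flight = bandwidth * RTT; divide by 10^6 to undo the
    # microseconds, then by 8 to convert bits to bytes.
    echo $(( bandwidth * rtt_us / 1000000 / 8 ))
}

# 50 Mbps link with a 2.7 ms round trip
bdp_bytes 50000000 2700
```

Plugging in other bandwidths and round-trip times gives a quick feel for how fast the BDP grows on longer or fatter links.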

So 16,875 bytes is the maximum quantity of data that can be in flight between my two nodes at any given time when the link is full. That raises two questions:

1. Why is my window growing all the way to 195,200 bytes on the graph above?

2. How much margin does the buffer need to operate properly?

And I would add a third one, just for fun:

3. What defines the rate of growth of the window?

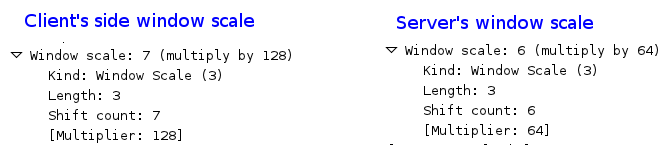

I have the beginning of an answer for (1): the window scale option is a negotiation. Each endpoint announces what it supports in the first packet it sends. The client sends its value in the SYN packet, and the server announces its own in the SYN,ACK packet.
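One way to look at that exchange in a capture is to keep only the packets with the SYN flag set and pull out the `wscale` value that tcpdump prints among the TCP options. A minimal sketch, assuming tcpdump's usual text output where the option shows up as `wscale N`; `wscale_of` is a hypothetical helper name, and `capture.pcap` stands in for whatever file the capture was saved to.

```shell
# wscale_of: read tcpdump text output on stdin and print the window
# scale shift count of every line that carries a "wscale N" option.
wscale_of() {
    sed -n 's/.*wscale \([0-9][0-9]*\).*/\1/p'
}

# Usage (capture.pcap is a placeholder file name):
#   tcpdump -n -r capture.pcap 'tcp[tcpflags] & tcp-syn != 0' | wscale_of
```

The filter matches both the SYN and the SYN,ACK, so for a single connection this should print one shift count per endpoint.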

The capture below displays the window scale options for the client and the server:

The client announces a maximum window of `2**16 << 7` = 8,388,608 bytes, but the server replies with a maximum of only `2**16 << 6` = 4,194,304 bytes. Regardless, this is a lot larger than the BDP of 16,875 bytes computed above. So why is it growing so large? ... more reading to do I guess :)
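The two ceilings are just the 16-bit window field shifted by each side's scale factor. A small sketch of that computation (`max_window` is a hypothetical helper name); note that the largest value the field can actually hold is 65,535 rather than `2**16`, so the exact limits come out slightly below the rounded figures quoted above.

```shell
# max_window SHIFT
# Prints the largest window, in bytes, that can be advertised with the
# given window scale shift count: the 16-bit field at 65535, shifted left.
max_window() {
    echo $(( 65535 << $1 ))
}

max_window 7   # scale factor announced in the SYN
max_window 6   # scale factor announced in the SYN,ACK
```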